How to Ask Better Survey Questions – a Checklist

The success of a survey starts with question design — good questions are key to collecting feedback that’s actually useful. Improving your questions is one of the easiest, most cost-effective steps you can take to improve the quality of your survey data.

Every survey has different goals, so there’s no universal “right question” — but there are a few guidelines that will help you ask better questions, no matter what you’re asking about.

And from better questions come better data.

Why a checklist?

Sometimes we forget things. That’s true even for the most highly trained professionals, like pilots and surgeons, who use checklists every day. Checklists provide consistency, organization, and enhanced performance. Don’t take our word for it: Atul Gawande wrote a great book on the subject, The Checklist Manifesto.

The checklist:

Before publishing your survey, ask yourself — did you:

- Minimize the number of questions?

- Start with an easy-to-answer question?

- Ask more than one question focused on your key interest?

- Ask important questions as early as possible?

- Keep language neutral?

- Only address one subject per question?

- Check for and remove jargon?

- Check for and resolve confusing language?

- Randomize multiple choice questions?

- Consider writing questions in first person?

- Make sure answer choices are exhaustive?

- Make sure multiple choice answer options are mutually exclusive?

- Test your survey?

Let’s look more closely at each one.

1. Minimize the number of questions

One of your main goals in designing a survey is to maximize the response rate: if no one answers your questions, you have no data. People are less likely to answer a long questionnaire than a short one, and often pay less attention to questionnaires that seem long, monotonous, or boring (common sense that’s been validated by research in the Journal of Clinical and Translational Science).

And low response rates negatively impact your data quality. According to Nielsen, low response rates create bias in the data, because only the most dedicated respondents will complete the surveys.

Try to make your surveys quick and painless. Ask yourself: Are all of the questions necessary? Respondents should only be asked questions that apply to them. (If you have questions that are only relevant to some respondents, filtering and branching can help you shorten your surveys.)
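As a rough illustration of filtering and branching, here is a minimal sketch in Python. The question IDs, the `show_if` rule format, and the `questions_for` helper are all hypothetical; survey tools implement branching in their own ways, so treat this as one possible approach rather than how any particular product works.

```python
# Hypothetical survey definition: a question with a "show_if" rule is only
# shown when an earlier question received the given answer.
QUESTIONS = [
    {"id": "ordered_delivery", "text": "Did you order delivery?", "show_if": None},
    {"id": "delivery_speed",
     "text": "How satisfied were you with the delivery time?",
     "show_if": ("ordered_delivery", "yes")},
    {"id": "overall", "text": "How would you rate your pizza overall?", "show_if": None},
]


def questions_for(answers):
    """Return the IDs of the questions a respondent should see, given their answers."""
    visible = []
    for question in QUESTIONS:
        rule = question["show_if"]
        # Show unconditional questions, and conditional ones whose rule is met.
        if rule is None or answers.get(rule[0]) == rule[1]:
            visible.append(question["id"])
    return visible


# A respondent who did not order delivery skips the delivery question.
print(questions_for({"ordered_delivery": "no"}))
```

Respondents who answer “no” to the first question never see the delivery question, which keeps the survey shorter for them.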

2. Start with a question that’s easy to answer

You might have heard of the “foot in the door” principle: You can engage people to complete longer tasks by getting them to agree to do something simple first. It works for surveys, too. Start with a general, straightforward question that is easy for people to answer.

A basic question type like a yes/no, numerical rating, or simple multiple choice question is a good start. If you can make it fun, that’s even better!

3. Ask more than one question focused on your key interest

Keep your primary goal in mind as you write questions, and ask more than one that relates to it.

Why? Because no matter how clear you think your survey questions are, your customers might interpret them differently. Asking questions that approach your subject from multiple angles helps ensure that you have enough depth and context to analyze your data.

4. Ask important questions as early as possible

No matter how short and easy to understand your survey is, not everyone will complete it. Questions that you ask early have a significantly higher chance of being answered, so ask your most important questions near the beginning.

But keep in mind that the order of questions matters. If an early question mentions a particular issue, respondents might be primed to think of it in relation to other questions. And some sensitive questions can trigger a higher drop-off rate. It’s usually best to ask any sensitive questions, including requests for demographic information (especially income), near the end of the survey.

5. Keep language neutral

Remember, your goal is to get honest feedback from your subjects. The best questions are neutral — don’t influence people with leading questions that suggest the kind of response you want. Leading questions are great for proving your point of view or marketing goal, but not for getting accurate answers that help you learn. Even emotional or evocative language in a question could be considered “leading”.

Here are two simple examples of leading questions:

“How delicious would you describe our award-winning pizza?”

“Do you believe the US should immediately withdraw troops from the failed war in Iraq?”

6. Avoid double-barreled questions

‘What do you like best about our pizza and atmosphere?’

That’s a bad question. What if the person liked the pizza but found the atmosphere off-putting? What if they didn’t like either? This kind of question, called “double-barreled,” prevents you from identifying specific products or service areas that need improvement. Such questions are difficult to answer and often lead to responses that are difficult to interpret.

Ask one question at a time. A question that includes an “and” or an “or” is probably double-barreled.

7. Check for and avoid jargon

The terms you use among your staff might not be well-known by your customers. Jargon can confuse respondents and ultimately lower the quality of your data. Simple, concrete language is more easily understood by respondents. If people have to turn to Google to interpret your questions, you have a problem (and probably a lot of incomplete surveys).

8. Avoid confusing language

Clear surveys aren’t just about avoiding industry jargon. You also need to be specific. If you ask someone to rate something on a scale of 1 to 5, is 1 the best rating or the worst?

Another way to confuse respondents is with double negatives: “Do you not dislike anchovies on your pizza?” If someone answers “no” to this question, what does it mean? It probably took you a second to determine that it means they dislike anchovies.

Unclear and undefined terms can have a similar impact. “How important is it that a candidate shares your values?” If this question stands alone, is it clear which values are meant? Specifying the term “values” would increase the quality of your responses significantly: “How important is it that a candidate shares your religious values?”

9. Randomize multiple choice questions

In multiple choice questions, participants tend to choose earlier options most often. Randomizing the order of your answer options for each respondent can minimize this effect.
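If you are building a survey yourself rather than using a tool with a built-in randomize setting, per-respondent shuffling might look something like the sketch below. The `randomized_options` helper and the `PINNED` set are hypothetical names, and keeping catch-all options such as “Other” at the end is an assumption added here for readability, not something the guideline above requires.

```python
import random

# Assumption: catch-all options are kept at the end instead of being shuffled.
PINNED = {"Other", "Not applicable"}


def randomized_options(options, respondent_id):
    """Return the answer options in a random order that is stable per respondent."""
    # Seeding with the respondent ID means a page reload shows the same order.
    rng = random.Random(respondent_id)
    movable = [option for option in options if option not in PINNED]
    pinned = [option for option in options if option in PINNED]
    rng.shuffle(movable)
    return movable + pinned


options = ["Margherita", "Pepperoni", "Hawaiian", "Other"]
print(randomized_options(options, respondent_id=1))
print(randomized_options(options, respondent_id=2))
```

Each respondent sees all of the options, but the order is shuffled per respondent, so no single option systematically benefits from appearing first.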

10. Consider writing questions in the first person

It’s a subtle difference, but it can lead to an increase in participation: Questions in the first person help people imagine themselves in the situation and associate that experience with an answer, leading to quicker and more accurate responses.

Saying, ‘What I liked most about my pizza was ____’ also gives the survey responder more ownership over their answer than asking ‘What did you like most about your pizza?’

First-person questions nudge respondents to think about actual past experiences rather than just their opinions.

11. Make sure the answers to each question are exhaustive

Closed-ended questions should include all reasonable responses — that is, the list of options is exhaustive.

If you present someone with a closed set of answer choices but none of them apply to their situation, you’ll receive a low-quality answer because they’ll be forced to choose something that’s not quite true. Let’s say you ask for respondents’ age range. Even if you’re only interested in answers from people between 20 and 44 years old, include answer choices like “19 or younger” and “45 or older.” You can filter your results later. What you don’t want are people choosing untrue answers (the “closest true”) because of a lack of alternatives.

You can also get around this by adding an “other” or “not applicable” option.

12. Make sure your multiple choice answers are mutually exclusive

If you have a multiple choice question that allows people to choose just one answer option, ensure that the answer options are mutually exclusive.

What did you eat for breakfast?

- Pancakes

- Waffles

- Omelet

This isn’t mutually exclusive — more than one answer could reasonably apply.

What is your age?

- 0-20

- 20-30

- 30 or older

This isn’t mutually exclusive either — the choices overlap. Which option does a 20- or 30-year-old pick?

Here is an example of a question with mutually exclusive answer options:

What kind of breakfast did you have today?

- Completely vegetarian

- Not vegetarian

- N/A

If one answer is true, the others can’t also be true — a respondent can only make one choice.
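For numeric ranges like the age question earlier in this section, overlaps and gaps can be checked mechanically. The following is a minimal sketch, assuming whole-number ranges with inclusive bounds; the `check_ranges` helper is hypothetical, and `None` is used here to mark an open-ended upper bound such as “30 or older.”

```python
def check_ranges(ranges):
    """Report overlaps and gaps in a list of (low, high) inclusive integer ranges.

    A high value of None means the range is open-ended ("30 or older").
    """
    problems = []
    ordered = sorted(ranges, key=lambda bounds: bounds[0])
    # Compare each range with the one that follows it.
    for (low_a, high_a), (low_b, high_b) in zip(ordered, ordered[1:]):
        if high_a is None or low_b <= high_a:
            problems.append(f"overlap between range starting at {low_a} and range starting at {low_b}")
        elif low_b > high_a + 1:
            problems.append(f"gap between {high_a} and {low_b}")
    return problems


# The overlapping age ranges from the example above: 0-20, 20-30, 30 or older.
print(check_ranges([(0, 20), (20, 30), (30, None)]))

# A corrected version: 0-19, 20-29, 30 or older.
print(check_ranges([(0, 19), (20, 29), (30, None)]))
```

The first call reports two overlaps (at ages 20 and 30), while the corrected ranges produce an empty list. The gap check also covers the previous guideline: a set of ranges with no gaps is exhaustive across the values it spans.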

13. Test your survey on a smaller group

Send your survey to a subset of your audience, or a test audience gathered online. This will tell you if people interpret your questions the way you intended, and can reveal blind spots that you can fix before distributing the survey to your entire audience.

The American Association for Public Opinion Research notes that this is “indispensable” to ensuring that your survey elicits the kind of data you need.

If possible, observe testers in person while they fill out the survey. Ask your participants to think aloud as they complete the survey to help you identify any interpretation issues or leading questions. And choose your testers wisely. Nielsen recommends “conduct[ing] at least one round of pilot testing with respondents from your demographic of interest — don’t rely just on your coworkers.”

That’s it. You might have a few more points to add to your checklist. In any case, putting some up-front work into your questions can make your data and analysis much more reliable and useful. Going through a checklist like this before publishing your surveys will be worth it.

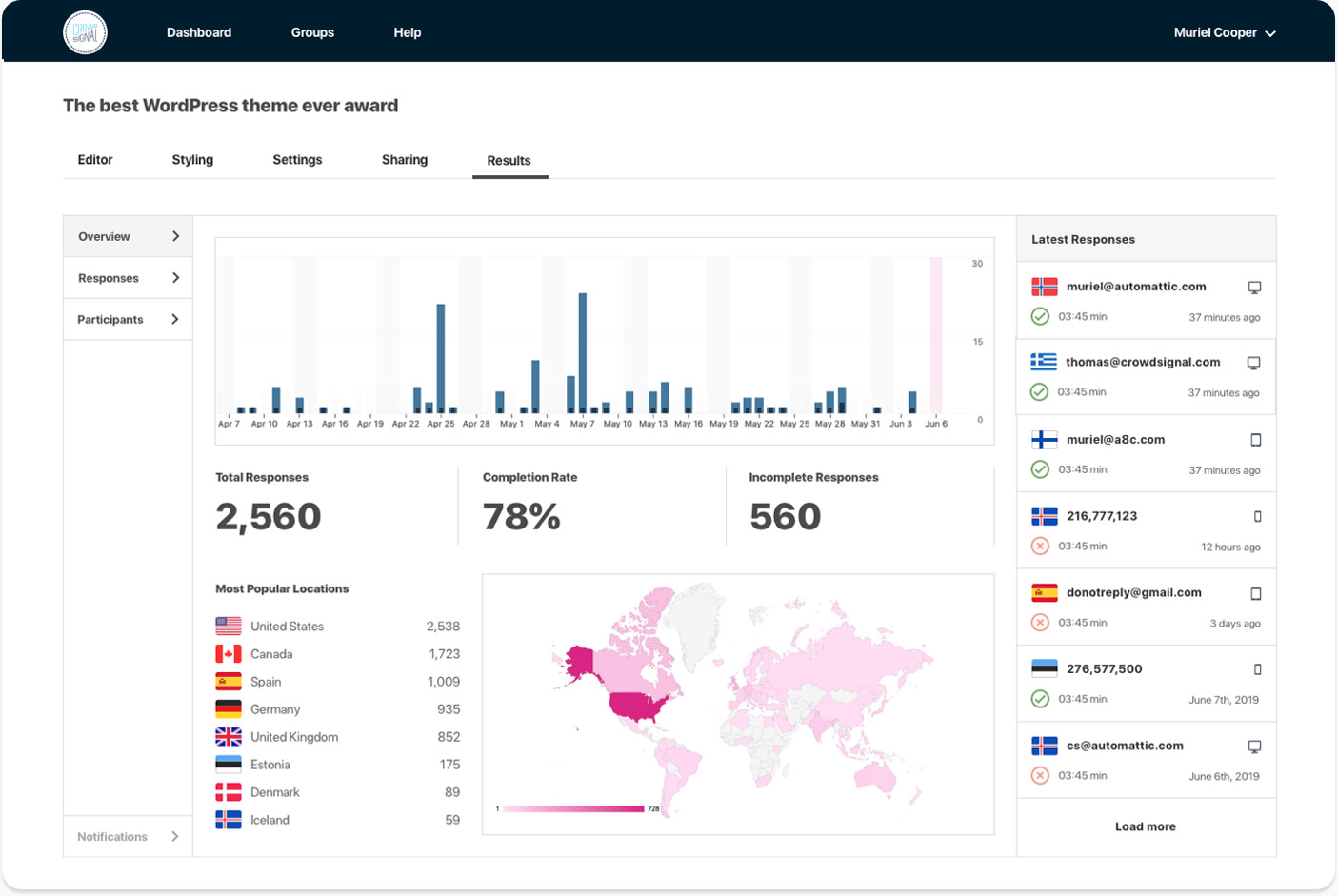

Use Crowdsignal to design your next survey.